Erasing Individuality: The Uncanny Undertone of AI Writing Assistance

Christopher Lueg

In a LinkedIn post discussing the official icons that companies pushing Artificial Intelligence (AI) use to depict their AIs (Clauss 2024), one line caught my attention: “there’s a button in the lower left offering to rewrite my post, as though I’d ever want that.”

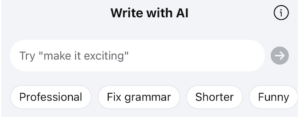

My off-the-cuff comment was that I find buttons like that offensive. It was a gut reaction, but where did it come from? Was it the shape of the buttons? The colors they use? Is it only about AI products offered by specific companies, or is it about particular contexts? After all, a “Write with AI” button does nothing more than offer a service, doesn’t it?

Before we delve into it (apparently a phrase that gives away AI authorship!), I’d like to acknowledge that the broad availability of Large Language Models (LLMs) and Generative AI (GenAI), the best-known product being OpenAI’s ChatGPT, is widely seen as an opportunity to streamline various kinds of office work. O’Rourke (2023) lists copywriter, marketing executive, lawyer, software developer, and researcher as suitable occupations. O’Rourke does not fail to include the usual warning that GenAI outcomes need to be checked due to “potential errors in generative AI’s search results.” GenAI’s very brief history shows that users do not always check the results, which in some instances has had career-shattering consequences, e.g. losing a court case after citing fake cases generated by OpenAI’s ChatGPT (Bohannon 2023), or including factually incorrect allegations generated by Google’s Bard in a Senate inquiry submission (Sadler 2023).

There is little doubt that GenAI can provide valuable services in certain areas, e.g. by aiding and arguably empowering people who lack experience in, say, writing business English or formatting CVs in specific ways, especially when applying for jobs that require submitting applications online, as is very common nowadays. In these cases, GenAI can be used to open doors that would otherwise remain closed, likely held shut by other AIs screening applications for proper template and keyword use. A special kind of AI arms race.

My unease was related to the uncanny valley in writing, a variant of Mori’s (2012) uncanny valley, which Lowe (2023) suggests emerges when a text “attempts to imitate human-like storytelling or tone but doesn’t get it quite right. It’s a sensation of unease, provoked by something that almost feels familiar, but is slightly off.” Examples include idioms, phrases, or expressions that are used slightly incorrectly; more generally, “slight but noticeable deviations from natural human writing can create a sense of disconnection and discomfort in the reader” (ibid). Or, as Robertson (2024) puts it, “AI used to be weird. Now ‘sounds like a bot’ is just shorthand for boring.”

Perhaps inevitably, we got to witness an arms race between AI text generators trying to sound more human-like (assisted by suckerfish companies offering to ‘humanize’ AI-generated texts) and people trying to uncover which pretend human texts were in fact generated by AI, e.g. by compiling lists of words and phrases that supposedly unmask GenAI writing (e.g. Bastian 2024; Matsui 2024). Braue (2024) reports that OpenAI has allegedly developed, but will not release, a tool that can accurately detect AI-generated text; as they say, the proof is in the pudding. As a university lecturer, I have learned a lot about ways to render plagiarism detection algorithms ineffective, and I’d be surprised if the same tricks didn’t also make AI detection harder.

As a non-native speaker of the language of the country where I live and work, I am very aware of how easy it is to use idioms, phrases, or expressions incorrectly when speaking or writing. My feeling of unease, though, really stems from my interest in how covering up individual differences is becoming normalized. Erasing individuality.

Earlier I suggested that the offer to rewrite what one has just written at the click of an AI button is just that: an offer to assist. But it is a double-edged sword, since, at least implicitly, the message is that one’s writing skills aren’t good enough to make a text “Professional,” “Funny,” “Heartfelt,” or “Inspirational” (some of the rewrite labels Facebook offered me when I wrote a new post; the outcomes were hilarious, since Facebook’s AI clearly missed the irony in my post).

When I reread Shew’s (2020) compelling takedown of technoableism, it occurred to me that ableism really is about rejecting individual differences. Technoableism is a term Shew coined to describe “a rhetoric of disability that at once talks about empowering disabled people through technologies while at the same time reinforcing ableist tropes about what body-minds are good to have and who counts as worthy” (p. 43).

In many cases, the design of the built environment (buildings, roads, tools including digital tools) embodies expectations as to how bodies must be able to perform. The built environment becomes inaccessible when a person’s abilities fail to meet these often unarticulated expectations. Common examples include venues that require people to climb stairs because there are no ramps, digital interfaces that require one to be sighted because there is no other way to access the information, and smoke alarms that require people to be able to hear. Shew (2020) argues it’s not an either-or situation: “we can both work to support individuals’ particular interests and needs and work to create a more accessible world for all,” while warning against “[continuing] to design technologies that reinscribe the ‘cure’ or normalization of individual disabled bodies and minds instead of making worlds more conducive to our existence” (pp. 49-50).

Earlier I mentioned that GenAI can empower people who lack experience in, say, writing business English or formatting CVs in specific ways. Similarly, GenAI can provide valuable support in a range of accessibility-related scenarios, including making it easier for people with physical or cognitive disabilities to compose texts.

As a society, we need to be very careful about the expectations we create. We shouldn’t expect every document to be “AI finished” (with “AI finishing” replacing the much more mundane expectation of a spell check), thereby erasing individual writing styles. At the same time, we must not discount texts just because they appear to be “AI finished.” There may be very good reasons why the author used AI assistance.

References

Bastian, M. (2024). Reddit users compile list of words and phrases that unmask ChatGPT’s writing style. The Decoder, May 1, 2024. https://the-decoder.com/reddit-users-compile-list-of-words-and-phrases-that-unmask-chatgpts-writing-style/

Bohannon, M. (2023). Lawyer Used ChatGPT in Court—And Cited Fake Cases. A Judge Is Considering Sanctions. Forbes, June 8, 2023. https://www.forbes.com/sites/mollybohannon/2023/06/08/lawyer-used-chatgpt-in-court-and-cited-fake-cases-a-judge-is-considering-sanctions/

Braue, D. (2024). OpenAI won’t release tool to find AI cheats. Information Age, ACS, August 8, 2024. https://ia.acs.org.au/article/2024/openai-won-t-release-tool-to-find-ai-cheats.html

Clauss, J. (2024). [LinkedIn post]. https://www.linkedin.com/posts/jclauss_big-tech-is-struggling-to-figure-out-how-activity-7227335709953564674-Y1Pt

Lowe, R. (2023). Uncanny Valley in Writing: 8 Powerful Aspects Explained. https://thewritingking.com/uncanny-valley-in-writing/

Matsui, K. (2024). Delving into PubMed Records: Some Terms in Medical Writing Have Drastically Changed after the Arrival of ChatGPT. medRxiv 2024.05.14.24307373; doi: https://doi.org/10.1101/2024.05.14.24307373

Mori, M. (2012). The Uncanny Valley (first English translation authorized by Mori). IEEE Spectrum, June 12, 2012. https://spectrum.ieee.org/the-uncanny-valley

O’Rourke, T. (2023). Work in one of these jobs? ChatGPT can help! Hays Career Advice. https://www.hays.com/career-advice/article/chatgpt-help-these-jobs

Robertson, A. (2024). You sound like a bot. The Verge, February 16, 2024. https://www.theverge.com/24067999/ai-bot-chatgpt-chatbot-dungeon

Sadler, D. (2023). Australian academics caught in generative AI scandal. Information Age, ACS, November 6, 2023. https://ia.acs.org.au/article/2023/australian-academics-caught-in-generative-ai-scandal.html

Shew, A. (2020). Ableism, Technoableism, and Future AI. IEEE Technology and Society Magazine. March 2020. DOI: 10.1109/MTS.2020.2967492

Cite this article in APA as: Lueg, C. (2024, August 27). Erasing individuality: The uncanny undertone of AI writing assistance. Information Matters, Vol. 4, Issue 8. https://informationmatters.org/2024/08/erasing-individuality-the-uncanny-undertone-of-ai-writing-assistance-2/

Author

Christopher Lueg is a professor in the School of Information Sciences at the University of Illinois Urbana-Champaign. Internationally recognized for his research in human-computer interaction and information behavior, Lueg has a special interest in embodiment (the view that perception, action, and cognition are intrinsically linked) and what it means when designing for others. Prior to joining the faculty at Illinois, Lueg served as professor of medical informatics at the Bern University of Applied Sciences in Biel/Bienne, Switzerland. He spent almost twenty years as a professor in Australia teaching at the University of Technology, Sydney; Charles Darwin University; and the University of Tasmania, where he co-directed two of the university's research themes, Data, Knowledge and Decisions (DKD) and Creativity, Culture, Society (CCS).