Is the World Different Depending on Whose AI Is Looking at It? Comparing Image Recognition Services for Social Science Research


Anton Berg & Matti Nelimarkka

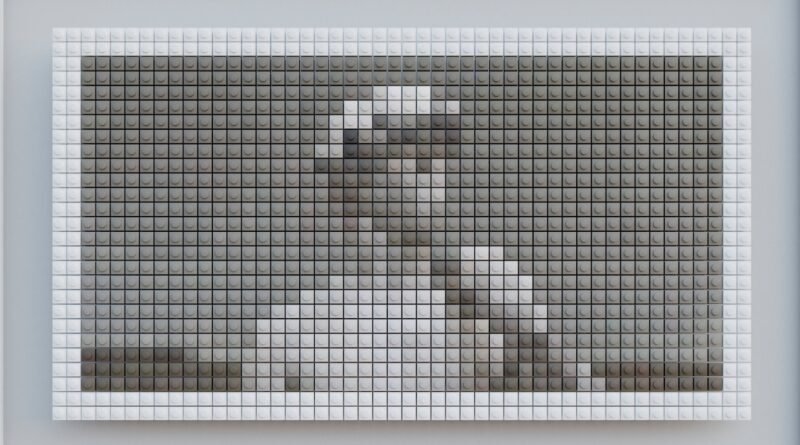

Images and other visual material are an important part of our media environment and are therefore a rich source of data for researchers interested in social, political, and cultural phenomena. However, traditional qualitative approaches to studying visual content do not scale to the large datasets now available through social media services, news media, and similar sources. Computational methods such as machine learning show potential here. Among them are automated image recognition services, which provide labels for an image (Figure 1). They can be used for content analysis, but previous research has been extremely cautious because the services differ from one another, which threatens reliability (see e.g. Webb-Williams, 2021).

[Figure 1: labels returned for an example image (surviving column heading: Google Vision).]

We examined the reliability of image recognition services by conducting a cross-comparison of three popular services: Google Vision AI, Microsoft Azure Computer Vision, and AWS Rekognition. We also varied the production quality (from user-taken to professionally taken images) and the thematic area (politics, news media, social media images) to examine whether the services were more reliable on some datasets than on others. We were able to systematically replicate previous findings: image recognition services do not agree on what they see.


However, we are cautious about claiming that this makes these services useless for examining visual material. Rather, their unreliability should be accounted for in the data analysis process. Intuitively, if one service detects a cat in the image and another a tiger, both see a feline animal but disagree on whether it is a big cat or a domestic cat. If the other service had seen a penguin instead, the disagreement between the services would have been greater; if it had seen a car, the object is evidently unclear to the tested image recognition systems.

Building on this intuition, we propose a cross-service label agreement (COSLAB) measurement to examine the reliability of these services. COSLAB uses word embedding models to evaluate how close two labels are, measured by their cosine similarity. The code is available on GitHub under the MIT license: https://github.com/uh-soco/coslab-core
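To make the intuition concrete, here is a minimal sketch of comparing two labels by cosine similarity. It is not the coslab-core implementation: the three-dimensional vectors in `TOY_EMBEDDINGS` are invented for illustration, and the function names are hypothetical. A real analysis would look the labels up in a trained word embedding model.

```python
import math

# Toy "embeddings", invented for illustration only (assumption, not real data).
TOY_EMBEDDINGS = {
    "cat": [0.9, 0.8, 0.1],
    "tiger": [0.8, 0.9, 0.2],
    "penguin": [0.5, 0.3, 0.6],
    "car": [0.0, 0.1, 0.9],
}


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0  # treat a zero vector as similar to nothing
    return dot / (norm_u * norm_v)


def label_similarity(label_a, label_b, embeddings=TOY_EMBEDDINGS):
    """Similarity of two labels under the given (here: toy) embeddings."""
    return cosine_similarity(embeddings[label_a], embeddings[label_b])


if __name__ == "__main__":
    for other in ("tiger", "penguin", "car"):
        print("cat vs", other, round(label_similarity("cat", other), 2))
```

With these made-up vectors, "cat" is closest to "tiger", further from "penguin", and furthest from "car", mirroring the example in the text.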

Our analysis shows that even with this looser definition of label similarity, which allows for close matches, image recognition services differ to a statistically significant degree. We show that these differences occur across production quality and thematic areas, suggesting that the differences are systematic.

Still, we are optimistic about the use of image recognition systems in social science because of their potential for open-ended exploratory search. Additional work to explore the reliability of the labels is nonetheless merited. We suggest two alternative strategies for applying image recognition services:

- Use several image recognition services, evaluate label accuracy with the COSLAB process, and drop all labels whose accuracy is below a given threshold. This approach is particularly suitable when the aim is to quantify images, as the process addresses threats from measurement error.

- For more exploratory uses, treat different image recognition systems as different perspectives on the images and retain all labels, with the understanding that they do differ and that these differences limit what can be said based on the analysis.
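The first strategy could be sketched roughly as follows. This is an illustration under stated assumptions, not the COSLAB procedure itself: the toy vectors are invented, `filter_labels` is a hypothetical helper, and the rule used here (keep a label if its best match among the other service's labels reaches the threshold) is one plausible reading of "accuracy below a given threshold".

```python
import math

# Toy "embeddings", invented for illustration only (assumption, not real data).
TOY_EMBEDDINGS = {
    "cat": [0.9, 0.8, 0.1],
    "tiger": [0.8, 0.9, 0.2],
    "car": [0.0, 0.1, 0.9],
}


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def filter_labels(labels_a, labels_b, threshold, embeddings=TOY_EMBEDDINGS):
    """Keep labels from service A whose best match in service B's labels
    has cosine similarity at or above the threshold (hypothetical rule)."""
    kept = []
    for label in labels_a:
        best = max(
            (cosine_similarity(embeddings[label], embeddings[other])
             for other in labels_b),
            default=0.0,  # no labels from service B: nothing to agree with
        )
        if best >= threshold:
            kept.append(label)
    return kept


if __name__ == "__main__":
    # Service A says "cat" and "car"; service B says "tiger".
    print(filter_labels(["cat", "car"], ["tiger"], threshold=0.8))
```

In this toy case "cat" survives because service B's "tiger" is a close match, while "car" is dropped. The second, exploratory strategy simply skips this filtering step and keeps every label from every service.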

Cite this article in APA as: Berg, A. & Nelimarkka, M. Is the world different depending on whose AI is looking at it? Comparing image recognition services for social science research. (2023, August 31). Information Matters, Vol. 3, Issue 8. https://informationmatters.org/2023/08/is-the-world-different-depending-on-whose-ai-is-looking-at-it-comparing-image-recognition-services-for-social-science-research/

Authors


Anton Berg (MSc, MRs) is a doctoral researcher in the datafication research programme at the Institute for Humanities and Social Sciences (HSSH) at the University of Helsinki. Berg has a background in religious studies, digital humanities and cognitive science and specialises in issues related to recognition technologies, datafication and artificial intelligence.
