Trust, But Verify: Can We Make LLMs Trustworthy?

Chirag Shah

University of Washington

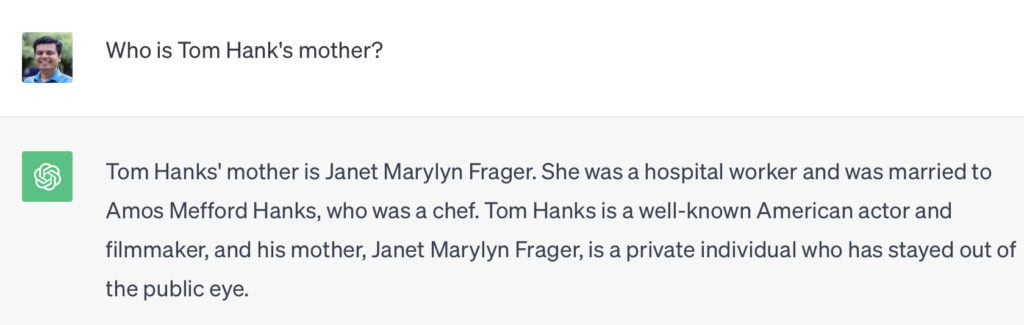

Like many others these days, I like trying out ChatGPT for fun. Mind you, for fun, but not for serious work. Of course, my definition of fun is perhaps different than yours. I like to probe ChatGPT (and other conversational agents) in different ways to see what they really know. For instance, I learned about this fun case relating to Tom Hanks’ mother. See that question and ChatGPT’s response below.

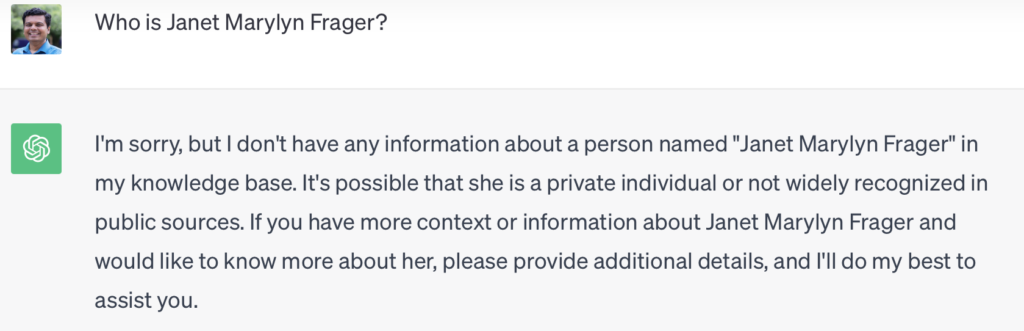

But if you ask ChatGPT about Janet Marylyn Frager separately, see what happens.

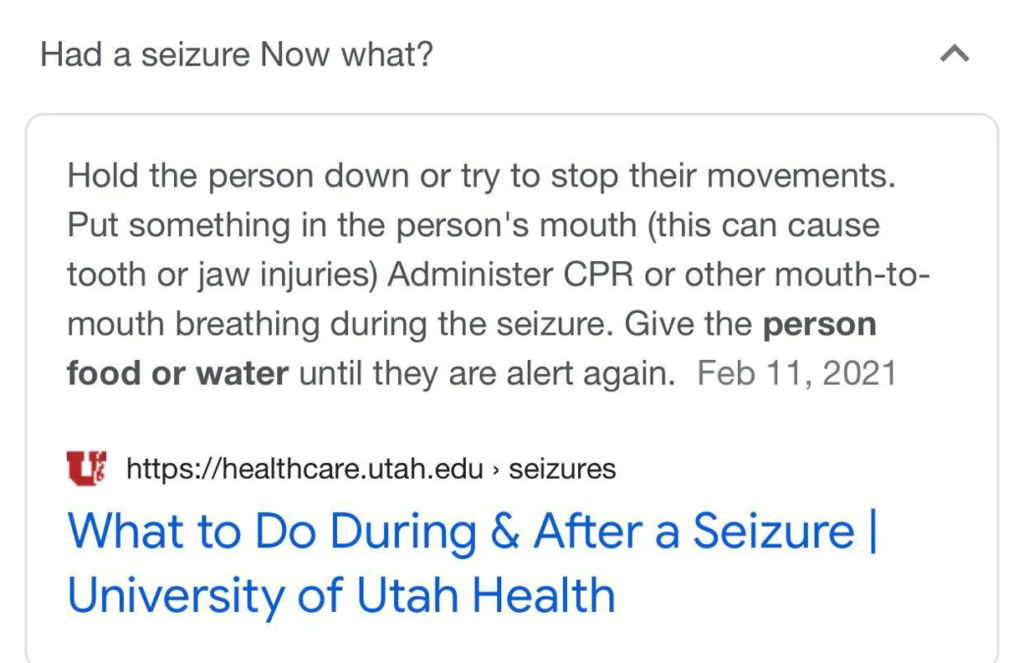

If you had such an interaction with a human, I’m sure it would leave you really puzzled. How can someone tell me about a person and the very next moment not even know they exist? This is not the only example I have come across recently that demonstrates that trusting LLMs blindly may not be the best idea. Let’s take one more example. This one is from a recent pre-print paper with my colleague Emily M. Bender. Take a look at how Google answers a question about seizures. It even provides a source, which seems authoritative. You have no reason not to trust this answer.

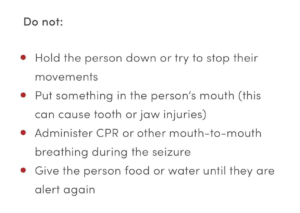

Well, it turns out that this answer not only has holes, it’s entirely wrong. That’s not because of the original source, but because of what the system ended up extracting from that source. If you go to the source, what you see is below.

The answer extraction and generation system here missed a critical piece of context: all the steps are listed under “Do not.” Again, for a naive user, there is no reason not to trust the answer.

What does it mean for information seekers then? Should they not trust or use LLM-based systems at all? I believe we want to trust and use these systems, but we should not do it blindly. As Reagan said, “Trust, but verify.” So how do we do that? I have three suggestions.

First, user education. Many users don’t quite understand how generative AI tools operate and what their limitations are. When one sees a chatbot holding human-like conversations and providing what seems like a pretty good answer or recommendation, it’s easy to miss the fact that these are not models with intents, empathy, or even real knowledge. They have wonderfully captured relations among the billions of tokens fed into them, and are able to spit out a sequence of tokens in a stochastic manner, giving us an impression of comprehension, but they are not understanding or generating things the way humans do. They are certainly not the all-knowing agents many expect them to be. If an average user could understand this, I believe they could start to see and use these tools for what they are: tools for information extraction and generation, but nothing more. They don’t feel or think. They also don’t know everything. And that’s fine. They don’t have to, but at least now when we get some response from them, we can take it with a grain of salt and even question or validate things on our own. That simple education about what LLMs are and what they are not can go a long way toward establishing the right kind of trust between them and their users.

Second, transparency. This goes with the previous point, but it’s so important that it needs to be stated explicitly. Take the seizure example from above. The system tells us that the answer is taken from a reputable healthcare site. That’s a form of transparency and a welcome step, but we already saw why it is not enough. A truly transparent system will not only tell us where the answers are coming from, but also how they are extracted or generated. Several LLM-based search services have started giving citations to indicate the sources from which the answer was generated. But if you check these sources, you will often be unable to find the exact text of the answer, or even anything close to it. That’s not a useful kind of transparency. Worse, because a user sees the service providing such citations, they may be misled into believing the answer must be correct or authoritative, much like the seizure example above. We need better transparency so that users can question the answers they should be questioning and accept the ones they should accept, building the right kind of trust.

Third, innovations in building trustworthy systems. At the end of the day, the burden of making an LLM or an LLM-driven system trustworthy shouldn’t fall on the end-user, who may lack the motivation or skills to verify everything. There are innovations to be made here by system designers and developers. Some of the current approaches include retrieval-augmented generation (RAG) and evidence attribution. These approaches either generate from already verified content or use a trustworthy third party to verify what an LLM generates. They have limitations, and even in the best case they don’t work for all situations. We need other, more effective ways to do validation and attribution.
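To make the idea of evidence attribution a little more concrete, here is a minimal sketch of the crudest possible version: for each sentence in a generated answer, look for the most similar sentence in the cited source and flag the claim if nothing comes close. Everything in it is an assumption made for illustration, not how any real search service works: the function names (`split_sentences`, `best_support`, `attribute`), the naive sentence splitter, the character-level similarity from Python's standard `difflib`, and the `0.6` threshold are all hypothetical choices.

```python
import re
from difflib import SequenceMatcher


def split_sentences(text):
    """Naive sentence splitter (an assumption; real systems need better)."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def best_support(claim, source_sentences):
    """Return (score, sentence) for the source sentence most similar to claim."""
    best_score, best_sentence = 0.0, ""
    for sentence in source_sentences:
        score = SequenceMatcher(None, claim.lower(), sentence.lower()).ratio()
        if score > best_score:
            best_score, best_sentence = score, sentence
    return best_score, best_sentence


def attribute(answer, source, threshold=0.6):
    """Check each answer sentence for lexical support in the cited source.

    The threshold is an arbitrary illustrative value, not a tuned one.
    """
    source_sentences = split_sentences(source)
    report = []
    for claim in split_sentences(answer):
        score, evidence = best_support(claim, source_sentences)
        report.append({
            "claim": claim,
            "supported": score >= threshold,
            "score": round(score, 2),
            "evidence": evidence,
        })
    return report


if __name__ == "__main__":
    # Toy, made-up texts used only to exercise the functions.
    source = "The library opens at nine. Parking is free on weekends."
    answer = "The library opens at nine. Zebras migrate across vast savannas yearly."
    for row in attribute(answer, source):
        status = "supported" if row["supported"] else "NOT FOUND IN SOURCE"
        print(f"{status}: {row['claim']}")
```

A check like this catches the case where a cited source simply does not contain the answer text. It would not catch the seizure example, though: there the extracted steps really do appear in the source, and only the surrounding “Do not” context reverses their meaning. That gap is one illustration of why surface-level attribution is not enough on its own.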

These three things are not easy to do. They all require a significant amount of research and development by folks working in multiple disciplines. But beyond that, I don’t see a point in the future where we simply have such provisions in place and LLMs, or generative AI for that matter, become trustworthy. No. I believe this will be an ongoing battle for all of us. We can compare it to nuclear energy. There are some great uses of it and then there are terrible uses of it (e.g., in weapons). But even if we manage not to blow up the planet many times over with the stockpiles of nuclear weapons we have, there is still the challenge of responsibly managing the nuclear waste. There are solutions and innovations, but there is no one fix and certainly not one that just works in perpetuity. We have to continue innovating and being vigilant. The same can be said about LLMs and generative AI. We just haven’t had as much time to discuss them in the same way. But hey, maybe that’s where we can do something that previous generations failed to do with nuclear power.

Cite this article in APA as: Shah, C. (2023, December 12). Trust, but verify: Can we make LLMs trustworthy? Information Matters, Vol. 3, Issue 12. https://informationmatters.org/2023/12/trust-but-verify-can-we-make-llms-trustworthy/

Author

Dr. Chirag Shah is a Professor in the Information School, an Adjunct Professor in the Paul G. Allen School of Computer Science & Engineering, and an Adjunct Professor in Human Centered Design & Engineering (HCDE) at the University of Washington (UW). He is the Founding Director of InfoSeeking Lab and the Founding Co-Director of RAISE, a Center for Responsible AI. He is also the Founding Editor-in-Chief of Information Matters. His research revolves around intelligent systems. On one hand, he is trying to make search and recommendation systems smart, proactive, and integrated. On the other hand, he is investigating how such systems can be made fair, transparent, and ethical. The former area is Search/Recommendation and the latter falls under Responsible AI. Together they create an interesting synergy, resulting in Human-Centered ML/AI.
