AI and the Future of Information Access

Chirag Shah

AI has been impacting the area of information access in significant ways. Some of these changes are welcome and have been decades in the making, whereas others are problematic and even harmful. The question is whether we can minimize those harms enough, and leverage the benefits of AI enough, to usher in a new era of even wider, deeper, and fairer access to information. To answer this, we need honesty, not hype, about these tools and technologies, along with the willingness to ask tough questions and the readiness to accept tough answers. For example, we could ask what assurance we have that an LLM (large language model) has captured diverse enough, current enough, and comprehensive enough content in a given domain for it to be a reliable and trustworthy source of information. We could, and perhaps should, ask whether an image generation tool respects privacy and provides attribution as appropriate. And we could ask whether a user, who may well be one of us, really understands what a chatbot is and is not capable of. Finally, before we get too excited about AGI (artificial general intelligence) or even just a smarter AI tool, we should ask why we need it and how we will ensure a level of equity, fairness, and accountability that does not go against our collective societal values. These are just some of the tough questions, and we have to understand that the answers may not come easily or be positive. But this is one place where “move fast and break things” or “ask for forgiveness instead of permission” is not a good idea for many of us in the longer term.

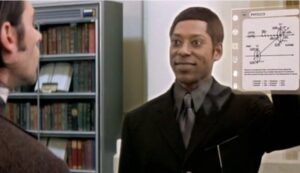

About 20 years ago, the movie The Time Machine, based on the classic novel of the same name by H. G. Wells, came out. It features a holographic librarian named Vox 114 who possesses all the world’s knowledge and can interact with a user through natural language and gestures. This is one possible vision of our future with information access and AI. Another possibility was envisioned by Swedish philosopher Nick Bostrom around the same time. Bostrom presents a thought experiment in which an AI system built to manufacture paperclips ends up destroying the world, because doing so lets it keep optimizing paperclip production. Depending on your inclinations, one or both of these may seem far-fetched, fantastic, or frivolous, but they serve to show two extremes that are already taking shape in our psyche today. Many think that current information access systems are heading toward Vox 114, where an all-knowing AI will be all we need to find, use, and understand any information. But many others believe that the same kind of AI development has the potential to destroy us like that paperclip maximizer. The truth, I believe, is somewhere in the middle. Rather than focusing too much on either extreme, I suggest we ask a more practical question: how do we ensure that we benefit from AI, with all it has to offer, while minimizing the risks?

There is no single answer to this, but I know we must start somewhere. We can start by understanding our past and assessing our present. We know that self-regulation is not something we have done well, whether in technology or in energy. We understand that technological and societal advancements need to happen with everyone’s participation and not through the views of a select few. We can project that fundamental human values such as safety, privacy, equality, benevolence, and universalism will continue to guide us, and that they need to be instrumental in any AI we build. With these in mind, I propose the following three guiding principles:

- Conformity: AI must understand and adhere to the accepted human values and norms.

- Consultation: To resolve or codify any value tensions, AI must consult humans.

- Collaboration: AI must be in collaboration mode by default and only move to take control with the permission of the stakeholders.

I propose we ensure that any AI we build is coded with currently accepted human values and norms (conformity). As the AI operates and encounters tensions among values, instead of making decisions on its own, it explicitly consults humans (consultation), thus avoiding value judgments that go against our desired outcomes as well as the learning of ill-intended behaviors. Finally, if and when an AI system replaces a human or takes control, it must do so with the explicit permission of the stakeholders (users, policymakers, and designers, as appropriate), and relinquish that control when those same stakeholders ask it to (collaboration).

Cite this article in APA as: Shah, C. (2023, October 25). AI and the future of information access. Information Matters, 3(10). https://informationmatters.org/2023/10/ai-and-the-future-of-information-access/

Author

Dr. Chirag Shah is a Professor in the Information School, an Adjunct Professor in the Paul G. Allen School of Computer Science & Engineering, and an Adjunct Professor in Human Centered Design & Engineering (HCDE) at the University of Washington (UW). He is the Founding Director of InfoSeeking Lab and the Founding Co-Director of RAISE, a Center for Responsible AI. He is also the Founding Editor-in-Chief of Information Matters. His research revolves around intelligent systems. On one hand, he is trying to make search and recommendation systems smart, proactive, and integrated. On the other hand, he is investigating how such systems can be made fair, transparent, and ethical. The former area is Search/Recommendation, and the latter falls under Responsible AI. Together, they create an interesting synergy, resulting in Human-Centered ML/AI.

View all posts